Understanding Your AI Role in ISO 42001 Certification

Published: Oct 24, 2024

Last Updated: Feb 25, 2026

Although ISO 42001 was published in December 2023, business leaders are still wrapping their heads around the standard. The framework is designed to help any organization that provides, develops, or uses artificial intelligence (AI) products and services to do so in a trustworthy and responsible manner, guided by the requirements and safeguards that the standard defines, including clearly defining your AI role.

While this new framework for an AI management system (AIMS) does bear some similarity to other management system standards (MSS), in that it’s structured around clauses 4-10 with an annex of controls designed to mitigate identified risks, it does feature one unique component: to achieve compliance with ISO 42001, you must determine your role with respect to the AI systems within scope.

Such a distinctive requirement deserves some closer attention, and as an experienced ISO Certification Body, we’re going to help break it down for you. In this blog post, we’ll delve into the different AI roles and how you can determine yours so that when you move forward with ISO 42001 certification, you’ll be able to navigate this requirement more easily.

The Importance of Your AI Role in ISO 42001

If you’re currently ISO 27701 certified, or have been previously, this concept may seem a little familiar, as that standard also requires organizations to determine their role. Being a standard focused on privacy, ISO 27701 asks organizations to define roles based on how they interact with personally identifiable information (PII), whether as a Processor, a Controller, or both.

In both cases, correctly defining this role is crucial, because properly establishing your organizational context and scope sets your organization up for success during initial certification. It affects which ISO 42001 requirements and controls apply (and to what extent), the lens through which you perform the risk assessment, and the organizational objectives you establish for the AIMS.

What are the Different ISO 42001 AI Roles?

In ISO 42001, the mandate for determining your AI role is contained within one of the foundational clauses (4.1), which is more generally focused on the identification and establishment of the influencing factors—e.g., internal and external issues—that affect your ISO 42001 scope and, therefore, its ability to achieve the intended results/objectives of the AIMS.

In our experience, organizations generally scope their ISO certifications around customer-facing systems/services since it’s usually their customers who request the organization to become certified. That being said, you are free to scope your management system more broadly as well.

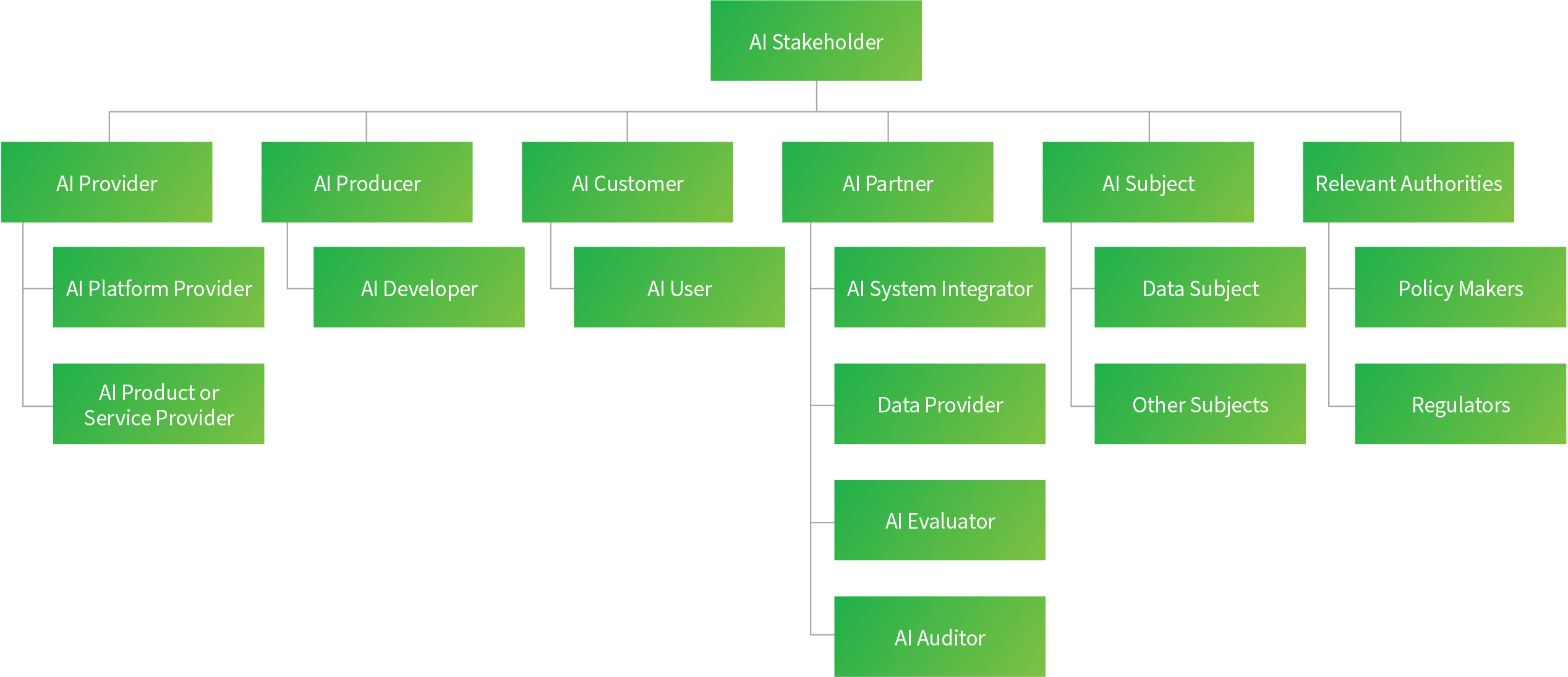

Now then, to determine your role, you should first familiarize yourself with the different options, and you can do that by referencing the detailed description of AI roles within ISO 22989—a standard that establishes terminology and describes concepts in the field of AI. Here’s a high-level figure of all of the different AI roles defined within that document:

AI Producer vs. AI Provider in ISO 42001

At Schellman, we believe the majority of our clients, being service providers (e.g., Software-as-a-Service (SaaS) providers, managed service providers (MSPs), data center and cloud hosting providers, etc.), will sit in the AI Provider role, but there are also circumstances where those organizations could be classified as AI Producers as well.

Below are more details on those two categories, AI Producer and AI Provider, and the differences between them, including important nuance regarding the latter:

| Role | Details |
| --- | --- |
| AI Producer | ISO 22989 Definition: AI Producers are broadly defined as “an organization or entity that designs, develops, tests, and deploys products or services that use one or more AI system.” Translation: AI Producers are AI developers, or those organizations that are designing, developing/building, and/or training AI services and products (e.g., creators/designers of AI models, datasets, and/or algorithms; model implementers; model verifiers, etc.) and are often upstream of the Provider. Example: Organizations like OpenAI, Anthropic, Google DeepMind, AI21 Labs, AWS, and Mistral AI would all fall into this category. |
| AI Provider | ISO 22989 Definition: AI Providers are broadly defined as “an organization or entity that provides products or services that uses one or more AI systems.” The role of “AI Provider” therefore encompasses both AI Platform Providers and AI Product or Service Providers. |
| AI Provider – AI Platform Provider | Translation: AI Platform Providers provide services that enable users to produce AI services and products. Example: Google Cloud’s AI Platform and Amazon SageMaker, among others (i.e., providers of platforms that organizations can use to build, train, and deploy machine learning (ML) models), would be considered AI Platform Providers. |
| AI Provider – AI Product or Service Provider | Translation: The role of AI Product / Service Provider would apply to organizations like those in the following example. Example: Organizations that provide SaaS offerings that utilize AI to execute certain tasks, whether such AI is developed internally or leveraged from third-party sources. |

So then, organizations that both develop and/or train AI models and provide such technologies to end users as a component of their service offerings would be considered both AI Producers and AI Providers (AI Product or Service Providers). In other words, your organization could hold more than one of these roles at once.
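To make the role logic above concrete, here is a minimal Python sketch of how an organization might record its self-assessment and derive the Producer and Provider roles that apply. The class names, field names, and yes/no questions are hypothetical and chosen purely for illustration; they are not wording from ISO 42001 or ISO 22989, and a real role determination would involve more judgment than three booleans.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class AIRole(Enum):
    PRODUCER = "AI Producer"
    PLATFORM_PROVIDER = "AI Provider (AI Platform Provider)"
    PRODUCT_OR_SERVICE_PROVIDER = "AI Provider (AI Product or Service Provider)"


@dataclass
class OrgProfile:
    """Hypothetical self-assessment answers; field names are illustrative only."""
    develops_or_trains_ai: bool = False        # designs, builds, and/or trains AI
    offers_platform_to_build_ai: bool = False  # lets others build, train, deploy models
    offers_ai_enabled_product: bool = False    # e.g., a SaaS offering that uses AI


def determine_roles(profile: OrgProfile) -> List[AIRole]:
    """Return every role that applies; an organization can hold several."""
    roles: List[AIRole] = []
    if profile.develops_or_trains_ai:
        roles.append(AIRole.PRODUCER)
    if profile.offers_platform_to_build_ai:
        roles.append(AIRole.PLATFORM_PROVIDER)
    if profile.offers_ai_enabled_product:
        roles.append(AIRole.PRODUCT_OR_SERVICE_PROVIDER)
    return roles


# A SaaS company that trains its own models and ships them in its product.
saas_with_own_models = OrgProfile(
    develops_or_trains_ai=True,
    offers_ai_enabled_product=True,
)
for role in determine_roles(saas_with_own_models):
    print(role.value)
```

The checks are deliberately independent rather than mutually exclusive, which mirrors the point that a single organization can be both an AI Producer and an AI Provider.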

AI Producers are considered part of the AI Provider’s supply chain; therefore, the Provider must ensure the Producer’s practices are trustworthy (refer to Annex A control domain A.10). There is a shared responsibility model here as well (much like we see between cloud service providers and cloud service customers): AI Producers are accountable for the quality and behavior of the AI system components or models they develop, whereas AI Providers are fully accountable for AI system use and compliance, as they have primary responsibility for system performance, ethics, and compliance.

The AI Customer Role in ISO 42001

Given that nuance within the AI Provider role, you may be wondering how the AI Customer role potentially fits in—here's the difference.

If an organization is leveraging AI from third-party sources (e.g., OpenAI), it is technically an AI Customer of OpenAI. If it is also integrating OpenAI’s GPT technology into the services it provides to its clients, it would fall into the AI Provider category as well (as a Product/Service Provider).

But if, as in this scenario, you’re defined as both roles, what does that mean for your ISO 42001 certification? You’ll likely want to focus on your AI Provider role, given that it’s the downstream role that directly interacts with your customers, and it’s those customers/end users who are most concerned about the trustworthiness of the AI system they’re directly interacting with. Here’s why: if you are both an AI Customer and Provider and focus your ISO 42001 scope on the latter role, there’s an Annex A control objective (A.10) that focuses on how organizations ensure that their usage of services/products provided by suppliers aligns with their approach for responsible use and development of AI systems. In other words, it would cover your AI Customer relationship.
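The scoping reasoning above can be written down as a small decision helper. The following Python sketch is illustrative only: the function name, the role strings, and the returned dictionary keys are assumptions made for this example, and it encodes just the one rule discussed here (a combined Customer and Provider focuses on the Provider role, with the supplier relationship addressed under A.10). It leaves any other combination of roles for the organization to judge.

```python
from typing import Dict, Optional, Set


def suggest_scope_focus(roles: Set[str]) -> Dict[str, Optional[str]]:
    """Suggest which role to centre the AIMS scope on (illustrative only)."""
    if {"AI Provider", "AI Customer"} <= roles:
        # Downstream, customer-facing role takes the focus; the upstream
        # supplier relationship is addressed by the A.10 control objective.
        return {
            "focus_role": "AI Provider",
            "customer_relationship_covered_by": "Annex A control objective A.10",
        }
    if len(roles) == 1:
        # Only one role applies, so there is nothing to prioritise.
        return {
            "focus_role": next(iter(roles)),
            "customer_relationship_covered_by": None,
        }
    # Other combinations are not covered by this sketch.
    return {"focus_role": None, "customer_relationship_covered_by": None}


# An organization that builds its service on a third-party model.
print(suggest_scope_focus({"AI Customer", "AI Provider"}))
```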

Compare to ISO 27017

ISO 27017 similarly distinguishes between cloud service providers (CSPs) and cloud service customers (CSCs) with separate extended controls and implementation guidance for each.

As defined by ISO 27017, CSPs (e.g., AWS) are suppliers of services to their customers (CSCs, or acquirers). However, a CSC can also act as a CSP if it in turn builds a SaaS application on top of AWS cloud infrastructure and offers it to end users (i.e., the CSC’s customers).

In our experience seeing organizations align their ISO 27001 certifications to ISO 27017, they do so in the CSP role since that is the downstream role where their services directly interact with their customers—the end user—as opposed to the upstream relationship with their CSP.

The AI Partner, Subject, and Relevant Authority Roles in ISO 42001

We’ve noticed that the AI Partner, Subject, and Relevant Authority roles apply less often. Nonetheless, they are established roles in ISO 22989, so we will provide some context on when those roles might be applicable to an organization pursuing ISO 42001:

- AI Partners would be organizations that provide products/services supporting the operation of an AI system but do not have full control over its behavior. Examples might be vendors of AI system components (APIs, datasets, libraries), third-party service integrators, and consultants/contractors aiding in the development, deployment, or monitoring of the AI system. Companies such as Scale AI, which provide data to train AI foundation models and thereby help AI Producers (Developers) improve their models, would be candidates for the AI Partner role. AI Partners are therefore a component of the AI supply chain: they are not responsible for the full behavior or output of the AI system but contribute to its capabilities.

- AI Subjects are individuals or groups that are subject to processing by an AI system or are affected by its outcomes. Examples might include customers or users interacting with an AI system (GPT users), individuals whose data is processed by an AI system, as well as employees, citizens, or patients impacted by AI decisions (credit scoring, hiring, healthcare diagnostics, etc.).

- AI Relevant Authorities play a crucial role in AI governance, risk mitigation, and ensuring responsible AI use – which includes alignment with applicable laws, industry standards, and ethical principles. Examples of external Relevant Authorities might include lawmakers and those with legal and jurisdictional regulatory oversight authority of AI systems. Internally, this can include compliance officers/chief AI officers, ethics boards, or AI governance committees.

In our experience, the AI Partner role certainly could have applicability for an ISO 42001 certification, though it may be of limited extent given they would have more limited accountability and responsibility for the overall AI system when compared to AI Producers and AI Providers.

AI Subjects and Relevant Authorities would certainly be considered when establishing the overall organizational context for an ISO 42001 certification. For example, the European Commission is a very relevant authority for organizations that operate within the EU, given that it would enforce the EU AI Act. Because AI Subjects and Relevant Authorities are more applicable as interested parties to the AIMS (as defined by ISO 42001 clause 4.2), they would be considered when establishing the scope of the AIMS and performing certain required management system activities, such as the AI system impact assessment, rather than being candidates for ISO 42001 certification themselves. This is because they play more of a role in influencing the direction of an AI Provider’s, Producer’s, Customer’s, or Partner’s AIMS implementation.

Next Steps for Your ISO 42001 Certification

Now that the standard has been published, many organizations are beginning preparation for ISO 42001 certification in the interest of proving their artificial intelligence can be trusted. One crucial aspect of preparation efforts—and overall compliance—will be to establish your role concerning the AI systems in scope, and now that you know a bit more about it, that should be easier to accomplish.

As you do get started in building your AIMS, keep in mind that ISO 42001 was designed with a harmonized structure that allows for integration with other MSS such as ISO 27001, ISO 27701, and ISO 9001—regarding these three in particular, they’re referenced several times within the ISO 42001 standard as there are unique tie-ins to AI risk management from a security, privacy, and quality perspective. So, if you’re already certified against one of those standards, it may benefit you to investigate the potential benefits of integration and the enhanced alignment that comes with it.

To learn more about those nuances—or to explore a potential ISO 42001 gap assessment to gain more confidence ahead of certification—contact us today.

About Danny Manimbo

Danny Manimbo is a Principal at Schellman based in Denver, Colorado, where he leads the firm’s Artificial Intelligence (AI) and ISO services and serves as one of Schellman’s CPA principals. In this role, he oversees the strategy, delivery, and quality of Schellman’s AI, ISO, and broader attestation services. Since joining the firm in 2013, Danny has built more than 15 years of expertise in information security, data privacy, AI governance, and compliance, helping organizations navigate evolving regulatory landscapes and emerging technologies. He is also a recognized thought leader and frequent speaker at industry conferences, where he shares insights on AI governance, security best practices, and the future of compliance. Danny has achieved the following certifications relevant to the fields of accounting, auditing, and information systems security and privacy: Certified Public Accountant (CPA), Certified Information Systems Security Professional (CISSP), Certified Information Systems Auditor (CISA), Certified Internal Auditor (CIA), Certificate of Cloud Security Knowledge (CCSK), and Certified Information Privacy Professional – United States (CIPP/US).